Developed by Jennifer Docktor and Ken Heller

| Purpose | To assess written solutions to problems given in undergraduate introductory physics courses. |
|---|---|
| Format | Rubric |
| Duration | N/A |
| Focus | Problem solving (Useful problem description, physics approach, specific application of physics, mathematical procedures, logical progression) |
| Level | Intro college, High school |

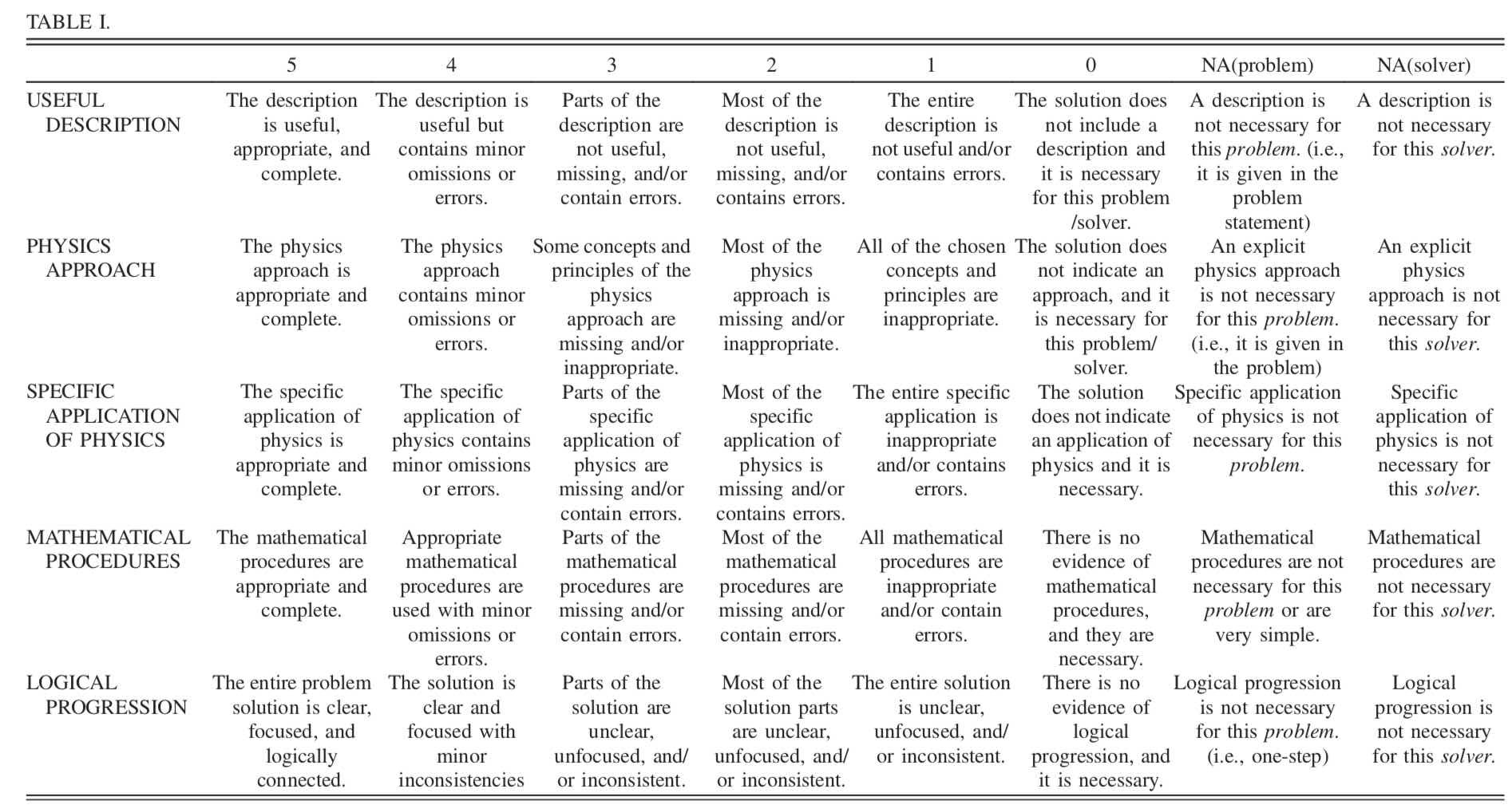

The full Minnesota Assessment of Problem Solving (MAPS) rubric from Docktor et al. 2016.

The developers' website contains more information about the MAPS rubric and training materials to help you learn how to use the rubric for general purposes or research purposes. The rubric training materials give examples of how to apply the rubric to student solutions to problems.


This is the third-highest level of research validation, corresponding to at least 3 of the validation categories below.

Research Validation Summary

Based on Research Into:

- Relevant theory and/or data

Studied Using:

- Iterative use of rubric

- Inter-rater reliability

- Expert review

Research Conducted:

- At multiple institutions

- By multiple research groups

- Peer-reviewed publication

The MAPS rubric is based on many years of research on student problem solving at the University of Minnesota. It builds on previous work by attempting to simplify the rubric and by adding more extensive tests of validity, reliability, and utility. The five problem-solving processes covered in the rubric are consistent with prior research on problem solving in physics (Docktor 2009). The validity, reliability, and utility of the rubric scores were studied in several ways. Expert reviewers used the rubric to understand how rubric scores reflect the solver's process, the generalizability of the rubric, and inter-rater agreement. Subsequent studies looked at content relevance and representativeness, how the training materials influenced inter-rater agreement, and the reliability and utility of the rubric. Based on these studies, both the rubric and the training materials were modified. The rubric was also studied using student interviews. Overall, these studies demonstrated the validity, reliability, and utility of the MAPS rubric. Research on the MAPS rubric is published in one dissertation and one peer-reviewed publication.
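As an illustration of what an inter-rater agreement check can look like, here is a minimal sketch that compares two raters' scores on a single rubric category. The source does not say which agreement statistic the MAPS studies used. Exact percent agreement and unweighted Cohen's kappa are shown only as two common choices, and the function names and the score lists are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Fraction of solutions on which two raters gave the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("score lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: observed agreement corrected for chance."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability both raters pick the same score
    # if each assigned scores independently at their own observed rates.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both raters used one identical score throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical scores from two raters on one category for eight solutions
rater_1 = [5, 4, 3, 3, 2, 5, 1, 4]
rater_2 = [5, 4, 3, 2, 2, 5, 1, 3]

print(percent_agreement(rater_1, rater_2))       # 0.75
print(round(cohens_kappa(rater_1, rater_2), 3))  # 0.686
```

Unweighted kappa treats a one-point disagreement the same as a five-point disagreement. For ordered rubric scores, a weighted variant may be more appropriate, but that choice is outside what the source describes.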

References

- J. Docktor, Development and Validation of a Physics Problem-Solving Assessment Rubric, Dissertation, University of Minnesota, 2009.

- J. Docktor, J. Dornfeld, E. Frodermann, K. Heller, L. Hsu, K. Jackson, A. Mason, Q. Ryan, and J. Yang, Assessing student written problem solutions: A problem-solving rubric with application to introductory physics, Phys. Rev. Phys. Educ. Res. 12 (1), 010130 (2016).

- J. Docktor and K. Heller, Assessment of Student Problem Solving Processes, presented at the Physics Education Research Conference 2009, Ann Arbor, Michigan, 2009.

PhysPort provides translations of assessments as a service to our users, but does not endorse the accuracy or validity of translations. Assessments validated for one language and culture may not be valid for other languages and cultures.

| Language | Translator(s) |
|---|---|
| Spanish | Nicolás Budini |

If you know of a translation that we don't have yet, or if you would like to translate this assessment, please contact us!

Download the MAPS scoring tool.

| Typical Results |

|---|

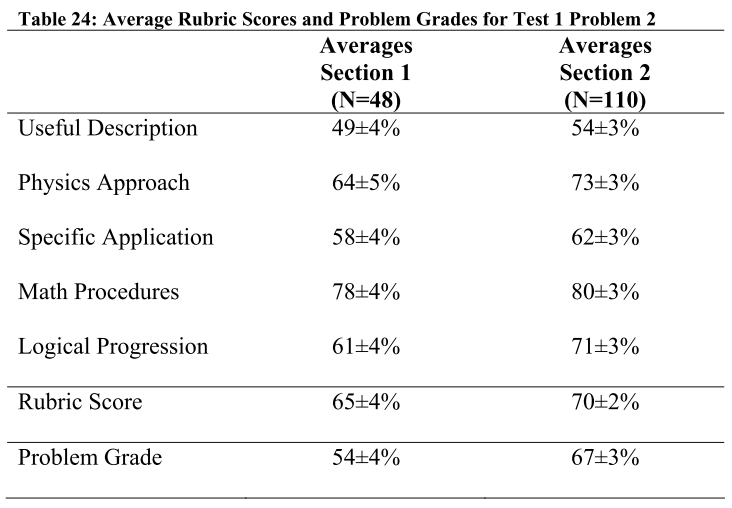

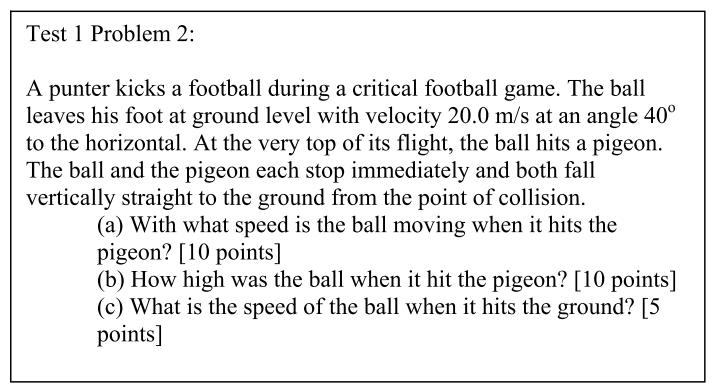

Because the MAPS is a rubric used to score physics problem solutions, there are no typical scores for this assessment. Here is an example of what the scores on this assessment look like (Table 24) for a specific physics problem (Test 1 Problem 2) for a specific group of students as reported in Docktor 2009.

|

The latest version of the MAPS, version 4.4, was released in 2008.