Developed by Stephanie V. Chasteen, Rachel E. Scherr, Monica Plisch, and the PhysTEC Project

| Purpose | To characterize physics teacher education programs in order to provide guidance for self-improvement and enable comparisons among programs. |
|---|---|
| Format | Rubric |
| Duration | 60-120 min |
| Focus | Teaching (Institutional commitment, leadership and collaboration, recruitment, knowledge and skills for teaching physics, mentoring community and professional support, program assessment) |
| Level | Upper-level, Intermediate, Intro college |

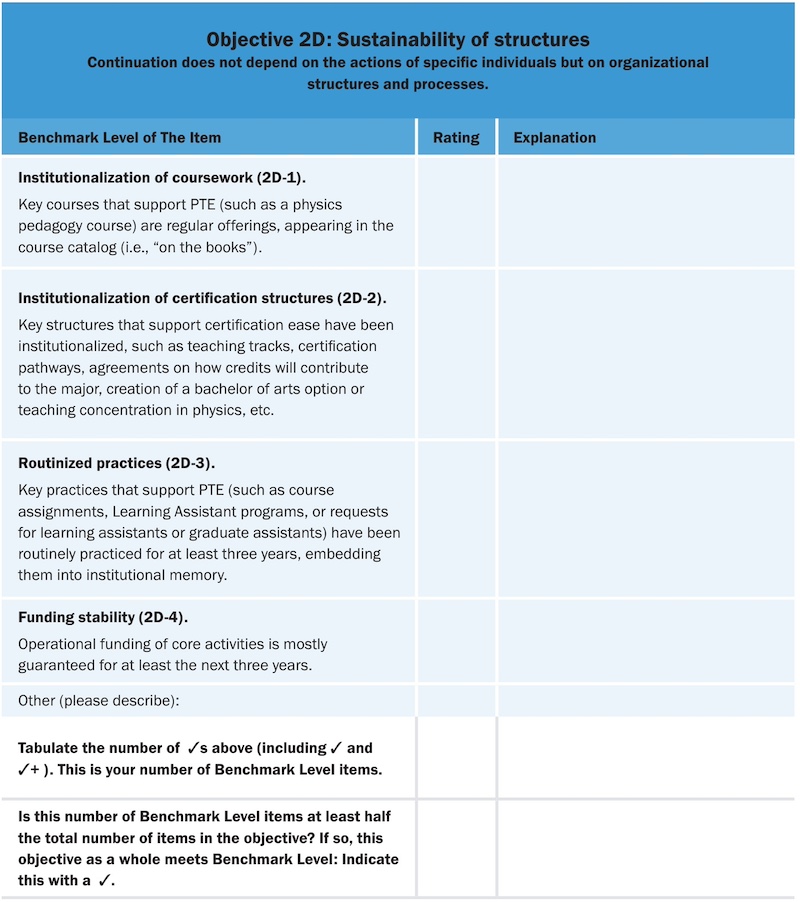

Example of PTEPA Rubric items for Objective 2D: Sustainability of structures:

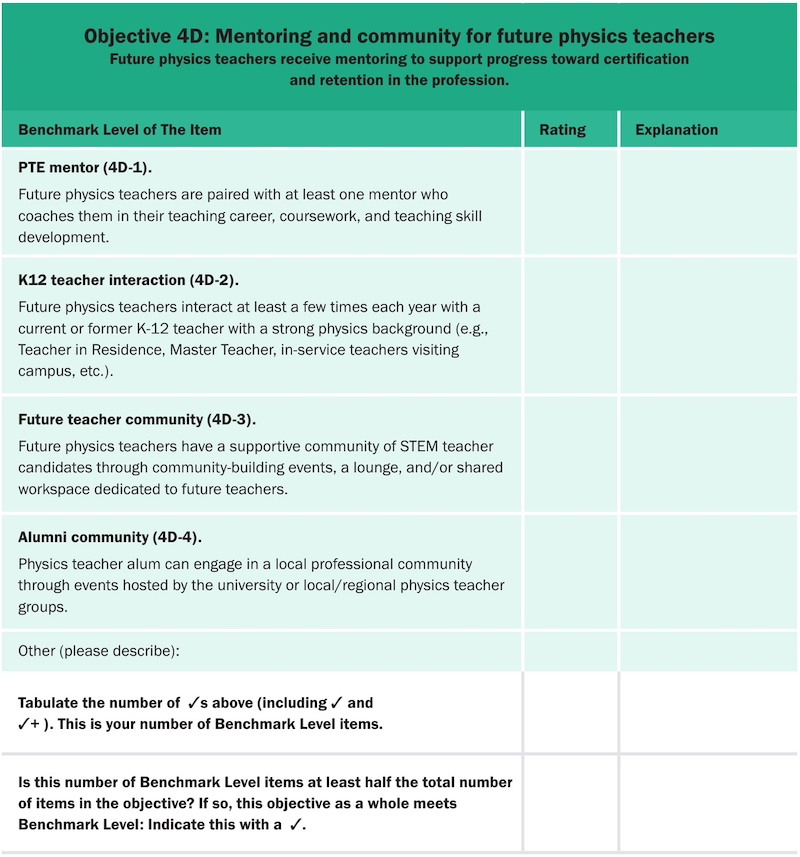

Example of PTEPA Rubric items for Objective 4D: Mentoring and community for future physics teachers:

PTEPA Rubric Implementation Guide

Everything you need to know about implementing the PTEPA Rubric in your class.

The developer's website contains much more information about the Thriving Programs Study and the Physics Teacher Education Program Analysis Rubric.

The PTEPA Rubric Developer’s Website includes:

- User’s Guide

- PDF and Excel versions of the PTEPA Rubric

- Full Report: A Study of Thriving Physics Teacher Education Programs


This is the highest level of research validation, corresponding to all seven of the validation categories below.

Research Validation Summary

Based on Research Into:

- Relevant theory and/or data

Studied Using:

- Iterative use of rubric

- Inter-rater reliability

- Expert review

Research Conducted:

- At multiple institutions

- By multiple research groups

- Peer-reviewed publication

Through extensive engagement with theory, analysis of existing instruments, review of relevant studies, and direct observations of thriving programs, independent researchers and PhysTEC staff collaborated on the development of the PTEPA Rubric. The researchers conducted in-depth visits to eight thriving physics teacher education programs. Program visits were conducted either in person or virtually, and each visit involved interviews with a wide variety of stakeholders, including program leaders, administrators, teachers, staff, and students. Analysis of the data from thriving programs contributed strongly to the development of the rubric, provided initial validation, and supported research findings. Review by nationally recognized experts in physics teacher education as well as extensive alignment with literature and accreditation processes established substantive validity, content validity, and face validity. During the development and validation process, the PTEPA Rubric was iteratively improved through over 20 versions to better reflect the practices and structures of diverse thriving physics teacher education programs.

References

- S. Chasteen and R. Scherr, Developing the Physics Teacher Education Program Analysis rubric: Measuring features of thriving programs, Phys. Rev. Phys. Educ. Res. 16 (1), 010115 (2020).

- R. Scherr and S. Chasteen, Development and validation of the Physics Teacher Education Program Analysis (PTEPA) Rubric, presented at the Physics Education Research Conference 2018, Washington, DC, 2018.

- R. Scherr and S. Chasteen, Initial findings of the Physics Teacher Education Program Analysis rubric: What do thriving programs do?, Phys. Rev. Phys. Educ. Res. 16 (1), 010116 (2020).

We don't have any translations of this assessment yet.

If you know of a translation that we don't have yet, or if you would like to translate this assessment, please contact us!

| Typical Results |
|---|
| There are no typical scores on the PTEPA, as it is meant to be used for self-study to show you the strengths and weaknesses of your program. To see examples of results on Version 2.0 for high-producing physics teacher education programs, see the full report, A Study of Thriving Physics Teacher Education Programs. |

The most recent version of the PTEPA Rubric, version 3.0, was released in 2024. Version 2.0 was released in 2018. From 2018 to 2022, the PTEPA was used extensively by the PhysTEC community, and the evaluators analyzed the results for validity, reliability, and association with physics teacher graduation rates. Based on those results, the current version is streamlined to focus on the most essential elements and to improve usability.

Updates in Version 3.0:

- Moved the name and focus away from PTE “programs.” We changed the name from Physics Teacher Education Program Analysis Rubric to the Physics Teacher Education Progress Assessment, reflecting a shift away from thinking about PTE as necessarily occurring within a well-defined PTE program.

- Used the Chasteen-Lau structure. We organized the top-level categories to align with the Chasteen-Lau framework, which was validated as a model of sustainable physics teacher education at institutions.

- Used “goals” and “objectives.” We used the more familiar terminology of “goals” and “objectives” to describe the aims of physics teacher education efforts (instead of the original accreditation-oriented language of “standards” and “components”). We used four objectives per goal for consistency of scoring and validity.

- Reduced to one level. We now show only the desired (Benchmark) Level instead of four Levels (Not Present, Developing, Benchmark, Exemplary). This presentation is more straightforward and more effective for action planning. Users now rate only whether they achieved the desired Benchmark Level or are slightly above or below it, rather than selecting among four distinct ratings.

- Provided targets. We identified an explicit target of “achieving 50% of the elements at least Benchmark Level or above in each objective and goal” based on regression studies showing this is predictive of long-term success.

- Modified items. We removed or re-worded items that were commonly misinterpreted, difficult to rate, or not well-defined. We added items based on evaluation or research. We removed the “Prevalent” designation from items that were more common but were not shown to have any special relationship to graduation rates. We aimed for four to five items per objective for simplicity and validity. We defined key terms in the text and removed most footnotes.
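The 50% target described under "Provided targets" can be checked with simple arithmetic. The sketch below is illustrative only and is not part of the PTEPA materials: the rating labels (`below`, `benchmark`, `above`), the tuple layout, the function names, and the example data are all assumptions, and it reads "achieving 50%" as "at least 50%".

```python
from collections import defaultdict

# Ratings counted as meeting the target.
# These labels are assumed for illustration, not official rubric wording.
MEETS = {"benchmark", "above"}


def fraction_meeting(ratings):
    """Return the fraction of item ratings at Benchmark Level or above."""
    if not ratings:
        raise ValueError("no ratings supplied")
    return sum(r in MEETS for r in ratings) / len(ratings)


def target_report(items, threshold=0.5):
    """Check the target for every objective and every goal.

    items: iterable of (goal, objective, rating) tuples (hypothetical layout).
    Returns two dicts mapping each objective and each goal to True when at
    least `threshold` of its items are at Benchmark Level or above.
    """
    by_objective = defaultdict(list)
    by_goal = defaultdict(list)
    for goal, objective, rating in items:
        by_objective[objective].append(rating)
        by_goal[goal].append(rating)
    objectives = {k: fraction_meeting(v) >= threshold for k, v in by_objective.items()}
    goals = {k: fraction_meeting(v) >= threshold for k, v in by_goal.items()}
    return objectives, goals


# Hypothetical self-ratings, invented for this example.
example = [
    ("2", "2D", "benchmark"),
    ("2", "2D", "below"),
    ("2", "2D", "above"),
    ("2", "2D", "below"),
    ("4", "4D", "below"),
    ("4", "4D", "below"),
    ("4", "4D", "benchmark"),
    ("4", "4D", "below"),
]
objectives, goals = target_report(example)
print(objectives)  # {'2D': True, '4D': False}
print(goals)       # {'2': True, '4': False}
```

In this made-up data, objective 2D has two of four items at or above Benchmark Level (50%, target met), while 4D has one of four (25%, target not met). A real self-study would use the scoring in the official Excel version of the rubric.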