Developed by Anton Lawson

| Purpose | To measure concrete and formal operational reasoning. |
|---|---|
| Format | Pre/post, Multiple-choice |
| Duration | 30 min |
| Focus | Scientific reasoning (proportional thinking, probabilistic thinking, correlational thinking, hypothetico-deductive reasoning) |
| Level | Intro college, High school, Middle school |

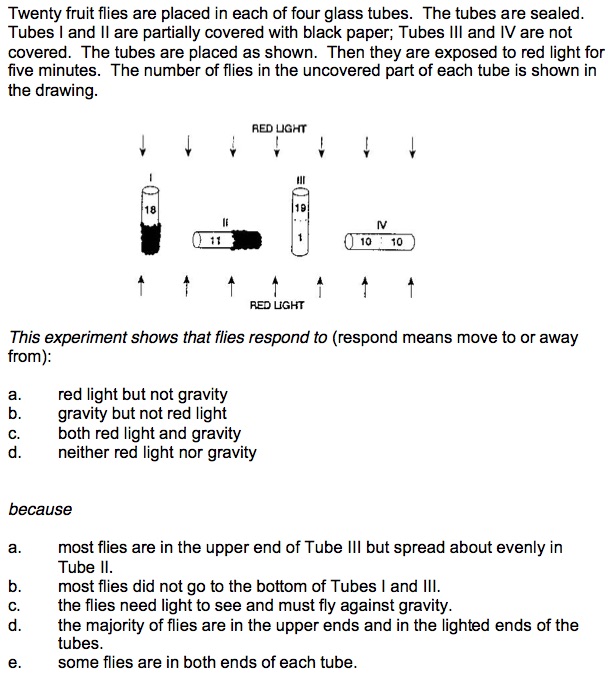

Sample questions from the CTSR:

CTSR Implementation and Troubleshooting Guide

Everything you need to know about implementing the CTSR in your class.


This is the highest level of research validation, corresponding to all seven of the validation categories below.

Research Validation Summary

Based on Research Into:

- Student thinking

Studied Using:

- Student interviews

- Expert review

- Appropriate statistical analysis

Research Conducted:

- At multiple institutions

- By multiple research groups

- Peer-reviewed publication

The multiple-choice questions on the CTSR (Lawson Test) were originally developed as instructor demonstrations with associated multiple-choice questions that also asked students for their reasoning. These demonstration questions were based on previous research on student reasoning. The CTSR was given to over 500 middle and high school students. A subset of students was interviewed to ensure that the whole-classroom test was comparable to an individually administered test. Reliability and difficulty were analyzed, and reasonable values were found. The CTSR also underwent expert review. Correlations between CTSR scores and other measures of reasoning were calculated, and adequate values were found. Using student responses, the developers also conducted a principal component analysis to identify questions that grouped together into factors. They found three factors: “formal reasoning”, “concrete reasoning”, and “early formal reasoning”. The Lawson test has been given to several thousand students, and results have been published in over 14 papers.
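As a rough illustration of what an analysis of difficulty and reliability can involve, the sketch below computes item difficulty (the fraction of students answering each item correctly) and the Kuder-Richardson 20 (KR-20) reliability coefficient for right/wrong scored responses. The validation summary above does not say which reliability statistic was used, so KR-20 is an assumption here, and the function names and the small `scores` table are hypothetical illustration data, not CTSR results.

```python
from statistics import pvariance


def item_difficulty(responses):
    """Return the fraction of students who answered each item correctly.

    `responses` is a list of rows, one per student, each a list of 0/1 scores.
    """
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_students for i in range(n_items)]


def kr20(responses):
    """Kuder-Richardson 20 reliability for dichotomously scored items."""
    k = len(responses[0])
    p = item_difficulty(responses)
    # Sum of item variances p * (1 - p) across all items
    sum_pq = sum(pi * (1 - pi) for pi in p)
    # Population variance of students' total scores
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_pq / total_var)


# Hypothetical scored responses: rows are students, columns are items
scores = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

difficulty = item_difficulty(scores)
reliability = kr20(scores)
print("Item difficulty:", difficulty)
print("KR-20 reliability:", round(reliability, 3))
```

With real data, each row would hold one student's scored answers; the total-score variance must be nonzero for the coefficient to be defined.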

References

- L. Bao, T. Cai, K. Koenig, K. Fang, J. Han, J. Wang, Q. Liu, L. Ding, L. Cui, Y. Luo, Y. Wang, L. Li, and N. Wu, Learning and Scientific Reasoning, Science 323 (5914), 586 (2009).

- L. Bao, K. Fang, T. Cai, J. Wang, L. Yang, L. Cui, J. Han, L. Ding, and Y. Luo, Learning of content knowledge and development of scientific reasoning ability: A cross culture comparison, Am. J. Phys. 77 (12), 1118 (2009).

- L. Bao, Y. Xiao, K. Koenig, and J. Han, Validity evaluation of the Lawson classroom test of scientific reasoning, Phys. Rev. Phys. Educ. Res. 14 (2), 020106 (2018).

- V. Coletta and J. Phillips, Interpreting FCI scores: Normalized gain, preinstruction scores, and scientific reasoning ability, Am. J. Phys. 73 (11), 1172 (2005).

- V. Coletta and J. Phillips, Addressing Barriers to Conceptual Understanding in IE Physics Classes, presented at the Physics Education Research Conference 2009, Ann Arbor, Michigan, 2009.

- V. Coletta, J. Phillips, and J. Steinert, FCI normalized gain, scientific reasoning ability, thinking in physics, and gender effects, presented at the Physics Education Research Conference 2011, Omaha, Nebraska, 2011.

- V. Coletta, J. Phillips, and J. Steinert, Why You Should Measure Your Students' Reasoning Ability, Phys. Teach. 45 (4), 235 (2007).

- K. Diff and N. Tache, From FCI To CSEM To Lawson Test: A Report On Data Collected At A Community College, presented at the Physics Education Research Conference 2007, Greensboro, NC, 2007.

- L. Ding, Detecting Progression of Scientific Reasoning among University Science and Engineering Students, presented at the Physics Education Research Conference 2013, Portland, OR, 2013.

- L. Ding, X. Wei, and X. Liu, Variations in University Students’ Scientific Reasoning Skills Across Majors, Years, and Types of Institutions, Res. Sci. Educ. 46 (5), 613 (2016).

- L. Ding, X. Wei, and K. Mollohan, Does Higher Education Improve Student Scientific Reasoning Skills?, Int. J. Sci. Math. Educ. 14 (4), 619 (2014).

- C. Fabby and K. Koenig, Relationship of Scientific Reasoning to Solving Different Physics Problem Types, presented at the Physics Education Research Conference 2013, Portland, OR, 2013.

- J. Jensen, S. Neeley-Tass, J. Hatch, and T. Piorczynski, Learning Scientific Reasoning Skills May Be Key to Retention in Science, Technology, Engineering, and Mathematics, J. Coll. Stud. Ret. 19 (2), 126 (2015).

- A. Lawson, The development and validation of a classroom test of formal reasoning, J. Res. Sci. Teaching 15 (1), 11 (1978).

- A. Lawson, The Nature and Development of Scientific Reasoning: A Synthetic View, Int. J. Sci. Math. Educ. 2 (3), 307 (2005).

- A. Lawson, B. Clark, E. Cramer-Meldrum, K. Falconer, J. Sequist, and Y. Kwon, Development of Scientific Reasoning in College Biology: Do Two Levels of General Hypothesis-Testing Skills Exist?, J. Res. Sci. Teaching 37 (1), 81 (2000).

- D. Maloney, Comparative reasoning abilities of college students, Am. J. Phys. 49 (8), 784 (1981).

- J. Moore and L. Rubbo, Scientific reasoning abilities of nonscience majors in physics-based courses, Phys. Rev. ST Phys. Educ. Res. 8 (1), 010106 (2012).

- P. Nieminen, A. Savinainen, and J. Viiri, Relations between representational consistency, conceptual understanding of the force concept, and scientific reasoning, Phys. Rev. ST Phys. Educ. Res. 8 (1), 010123 (2012).

- B. Pyper, Changing Scientific Reasoning and Conceptual Understanding in College Students, presented at the Physics Education Research Conference 2011, Omaha, Nebraska, 2011.

- M. Semak, R. Dietz, R. Pearson, and C. Willis, Predicting FCI gain with a nonverbal intelligence test, presented at the Physics Education Research Conference 2012, Philadelphia, PA, 2012.

- A. Stammen, K. Malone, and K. Irving, Effects of Modeling Instruction Professional Development on Biology Teachers’ Scientific Reasoning Skills, Educ. Sci. 8 (3), 119 (2018).

- S. Westbrook and L. Rogers, Examining the development of scientific reasoning in ninth-grade physical science students, J. Res. Sci. Teaching 31 (1), 65 (1994).

- Y. Xiao, J. Han, K. Koenig, J. Xiong, and L. Bao, Multilevel Rasch modeling of two-tier multiple choice test: A case study using Lawson’s classroom test of scientific reasoning, Phys. Rev. Phys. Educ. Res. 14 (2), 020104 (2018).

PhysPort provides translations of assessments as a service to our users, but does not endorse the accuracy or validity of translations. Assessments validated for one language and culture may not be valid for other languages and cultures.

| Language | Translator(s) |
|---|---|
| Arabic | Al-Zaghal |
| Chinese | Yajun Wei |
| Serbian | Branka Radulović |
| Spanish | Genaro Zavala and PERIG (Physics Education Research and Innovation Group) |
| Swedish | Joel Andreas Ozolins |

If you know of a translation that we don't have yet, or if you would like to translate this assessment, please contact us!


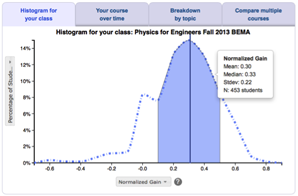

Score the CTSR on the PhysPort Data Explorer

With one click, you get a comprehensive analysis of your results. You can:

- Examine your most recent results

- Chart your progress over time

- Break down any assessment by question or cluster

- Compare between courses

Typical Results

The following results are from the original study (Lawson, 1978), which used the version of the test that combined multiple-choice questions with open-ended explanations. Because the multiple-choice options were based on responses from this study, results can be expected to be similar:

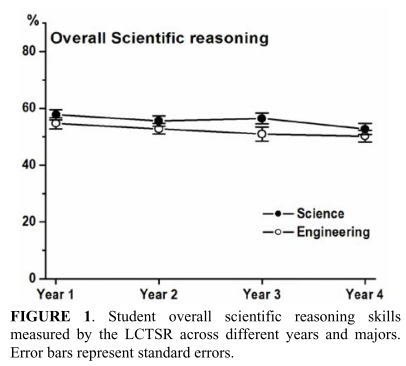

*The 9th graders were from a different school, which was of higher economic status.

Typical results from Ding 2013, presenting CTSR scores from science and engineering students across the four years of higher education at two Chinese universities:

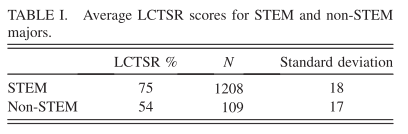

Typical pre-test CTSR results from Moore and Rubbo 2012 comparing science and non-science majors:


The latest version of the CTSR, released in 2000, is version 2. It revises the original multiple-choice version, released in 1978, whose 15 questions asked students to choose the correct answer and explain their reasoning. The original assessment required the teacher to perform demonstrations in order to pose the questions. The latest version is multiple-choice, with choices based on students’ open-ended responses, making it nearly as reliable and much easier to administer and grade.