Peer Instruction

Frequently asked questions

Other sites with lists of Frequently Asked Questions about Peer Instruction:

- Carl Wieman Science Education Initiative Clicker Resource Guide: Pages 20-27 answer Frequently Asked Questions About the Use of Clickers and Clicker Questions.

- The 6 most common questions about using Peer Instruction, answered: from the official Peer Instruction blog.

- Peer Instruction FAQs: from Learning Catalytics, a technology for implementing Peer Instruction.

More Frequently Asked Questions:

Which polling method should I use?

There are at least three methods of collecting students’ answers to questions in Peer Instruction: clickers, flashcards, and show of hands. Lasry 2008 found that when clickers and flashcards are used in a similar way, there is no difference in learning gains between the two systems. However, each polling method makes some instructional practices easier or harder, and each has its own advantages and disadvantages. Several blog posts discuss the pros and cons of different polling methods, from Derek Bruff, Andy Rundquist, …

- Clickers/personal response systems: This is rapidly becoming the most popular polling method for Peer Instruction. In addition to commercial clicker systems, there are also systems that let students submit responses from their own cell phones or laptops.

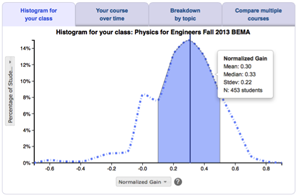

Advantages:

- Clicker systems record exactly how many students gave each response and can instantly create a histogram that gives the instructor and students a visual summary of the responses.

- Data from clickers can be stored to be used for later formative assessment, grading, or research purposes.

- Student responses are anonymous to other students – they cannot see what answers other students select so they are less likely to be swayed by peer pressure or feel anxiety about selecting a wrong answer.

- Student responses appear anonymous to the instructor, which may lead to less anxiety about selecting a wrong answer.

Disadvantages:

- Clickers require time and effort to set up and may have technical problems.

- Clickers are more expensive than the other methods listed below. This expense may be borne by the department or by the students.

- If students forget their clickers or have technical problems, they cannot participate in voting.

- Flashcards: Each student has a set of 5 colored cards with each color corresponding to a multiple choice answer. Students hold up the card corresponding to the answer they select in front of their chest so that the instructor can see it but other students can’t. A variation is having cards or sheets of paper with letters written on them. Colors are preferable because they make it much easier for an instructor to get a visual sense of the prevalence of each answer by a quick scan of the room.

Advantages:

- Cheaper than clickers

- Faster and easier to set up than clickers and less prone to technical problems

- If students are instructed to hold the cards to their chests and not look around the room when they answer, they are anonymous to other students, so that students are less likely to be swayed by peer pressure or feel anxiety about selecting a wrong answer.

- Unlike clickers, they are NOT anonymous to the instructor, which may encourage students to take their answers more seriously.

- If an instructor wants students to get a sense of how other students answered after all students have voted, they can ask students to hold up their cards and look around the room.

Disadvantages:

- Results are not stored for future use in formative assessment, grading, or research.

- There is no way to display the results to all students without making those who answered incorrectly stand out.

- Requires time to distribute cards in large classes.

- The lack of anonymity may lead some students to be reluctant to answer.

- Show of hands: The instructor asks students who selected answer A to raise their hands, then those who selected answer B, etc.

Advantages:

- Easiest method to implement, requiring no time to set up or to distribute cards or clickers.

- Free

Disadvantages:

- Requires more time for each question, because the instructor must ask students to raise their hands for each option in turn rather than collecting all responses at once.

- Not anonymous to other students or to the instructor, so students may be embarrassed if they chose an unpopular answer and may be inclined to change their answer on the spot if they see that many students chose a different answer.

- Results are not stored for future use for formative assessment, grading, or research.

- No way to see a summary of all responses at once.

Should I grade ConcepTests?

If you use clickers, it is possible to grade student responses, either for completion or for correctness.

Most advocates of Peer Instruction suggest that student responses to in-class questions count for a small percentage of the grade (2-15%) to encourage participation, attendance, bringing clickers to class, and taking clicker questions seriously. (For a dissenting view, however, see this blog post by Joss Ives.)

Most advocates suggest grading only for participation, not for correctness, to emphasize that the goal of clicker questions is to help students learn, not to evaluate them. Research supports this view: James 2006 audio-recorded student conversations in two introductory astronomy classrooms that used different grading schemes for Peer Instruction questions, and summarizes the results as follows: "In the high stakes classroom where students received little credit for incorrect CRS [classroom response system] responses, it was found that conversation partners with greater knowledge tended to dominate peer discussions and partners with less knowledge were more passive. In the low stakes classroom where students received full credit for incorrect responses, it was found that students engaged in a more even examination of ideas from both partners. Conversation partners in the low stakes classroom were also far more likely to register dissimilar responses, suggesting that question response statistics in low stakes classrooms more accurately reflect current student understanding and therefore act as a better diagnostic tool for instructors."

If you do grade clicker questions for correctness, it's important to grade only those questions for which students can reasonably be expected to know the answer. Thus, questions that are intended to introduce students to a new topic, elicit student thinking, or help students engage with ambiguous ideas should not be graded.

Can I use Peer Instruction in small classes?

Peer Instruction is often used in large classes because there are few other ways to make sure every student in a large class is engaged. Instructors may be reluctant to use Peer Instruction in a small class because it seems artificial in an environment where you know all your students and can engage them in other ways. However, Peer Instruction has many benefits even in small classes. Joss Ives, in his blog post Why I use clickers in small courses, says: "Even in a class of 10, I find that there are usually some students that often do not feel comfortable discussing their understanding with the entire class. The clickers facilitate the argumentation process for these students in a smaller-group situation in which these students feel more comfortable, but are still held accountable for their answers. They help establish a culture where on most questions each student is going to be discussing their understanding with their peers. Clickers are not the only way to accomplish this, but are the way I do it."

Can I use Peer Instruction in upper-division classes?

Peer Instruction was originally developed for introductory physics classes, but it can also be used in upper-division classes. The University of Colorado has implemented Peer Instruction in its upper-division E&M and Quantum Mechanics courses and found that it is effective for student learning (Chasteen and Pollock 2009) and that both instructors (Pollock, Chasteen, Dubson, and Perkins 2010) and students (Perkins and Turpen 2009) value it.

Stephanie Chasteen, one of the leaders in the implementation of Peer Instruction at CU, describes how it works in several blog posts (part 1: What does it look like?, part 2: What kinds of questions do we ask?, part 3: The critics speak, and part 4: Tips for success) and several videos (Upper Division Clickers in Action, What Kinds of Questions Do We Ask in Upper Division?, and Writing Upper Division Clicker Questions).

References

- S. Chasteen and S. Pollock, Tapping into Juniors’ Understanding of E&M: The Colorado Upper-Division Electrostatics (CUE) Diagnostic, presented at the Physics Education Research Conference 2009, Ann Arbor, Michigan, 2009.

- M. James, The effect of grading incentive on student discourse in Peer Instruction, Am. J. Phys. 74 (8), 689 (2006).

- N. Lasry, Clickers or Flashcards: Is There Really a Difference?, Phys. Teach. 46 (4), 242 (2008).

- K. Perkins and C. Turpen, Student Perspectives on Using Clickers in Upper-division Physics Courses, presented at the Physics Education Research Conference 2009, Ann Arbor, Michigan, 2009.

- S. Pollock, S. Chasteen, M. Dubson, and K. Perkins, The use of concept tests and peer instruction in upper-division physics, presented at the Physics Education Research Conference 2010, Portland, Oregon, 2010.